29 Mar DataOps design principles for building data pipelines

Data is only useful when you can derive value from it. To create value from data, you need the right data in the right place at the right time, along with the right tools and processes.

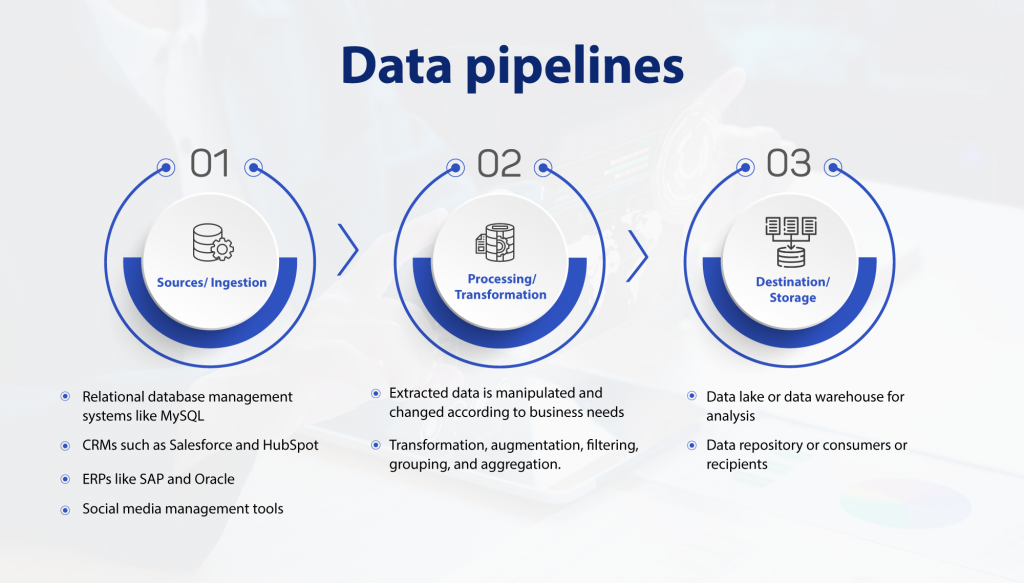

How your data is collected, modeled, and delivered is critical in transforming disparate data elements into insights and intelligence. This is where data pipelines come in.

Data pipelines help streamline the data lifecycle and ensure a seamless flow of data from sources to consumers across your organization.

DataOps and data pipelines

DataOps is an agile approach to data management that covers building, deploying, and maintaining distributed data analytics models to shorten an analytics project’s time to value. It adopts practices from DevOps, lean manufacturing, and product engineering, and it fosters collaboration between data creators and data consumers to develop and deliver analytics in an agile, iterative process.

DataOps helps streamline the design, development, and maintenance of data pipelines by standardizing the process and governing how data from disparate sources is transformed into insights and intelligence.

DataOps principles for building data pipelines

DataOps focuses on collaboration and flexibility to deliver value faster by improving communication, integration, and automation of data flows between data sources, data managers, and data consumers in the organization. Here is how DataOps principles can help you build data pipelines:

Be Agile – Build incrementally

DataOps embraces agile development methodologies to deliver data pipelines faster and with more frequent updates. This lets you perform data transformations in small, iterative steps that follow engineering best practices, and it allows you to test individual components before incorporating them into the pipeline. Use agile methods to break the project into manageable pieces, prioritize specific business processes, and introduce automation one segment at a time. This approach helps ensure reliable results before the components are combined, and it lets you respond quickly to changes.
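
To make the idea concrete, here is a minimal Python sketch, assuming records are plain dictionaries. The step names (`drop_missing_ids`, `normalize_country`) and the `compose` helper are hypothetical. Each step is small enough to check on its own, and the pipeline is only assembled from steps that already pass their checks.

```python
from functools import reduce
from typing import Callable

# Assumption: a record is a plain dict; a step maps a list of records to a new list.
Record = dict
Step = Callable[[list[Record]], list[Record]]


def drop_missing_ids(rows: list[Record]) -> list[Record]:
    """Hypothetical step 1: discard rows that have no 'id'."""
    return [r for r in rows if r.get("id") is not None]


def normalize_country(rows: list[Record]) -> list[Record]:
    """Hypothetical step 2: trim and upper-case the 'country' code."""
    return [{**r, "country": str(r.get("country", "")).strip().upper()} for r in rows]


def compose(*steps: Step) -> Step:
    """Chain steps left to right into a single pipeline function."""
    return lambda rows: reduce(lambda acc, step: step(acc), steps, rows)


# Each step is verified in isolation before it joins the pipeline.
assert drop_missing_ids([{"id": 1}, {"id": None}]) == [{"id": 1}]
assert normalize_country([{"id": 1, "country": " de "}]) == [{"id": 1, "country": "DE"}]

pipeline = compose(drop_missing_ids, normalize_country)
```

Adding a new transformation then means writing and checking one more small function and appending it to the `compose(...)` call, rather than editing a monolithic script.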

Collaborate effectively

DataOps focuses on communication, collaboration, integration, experience, and cooperation. To manage a growing number of data sources, you need to incorporate various deployment strategies and draw on the insights, experience, and expertise of many team members. Business owners play a critical role in data transformation, because their functional domain expertise helps in designing the transformation rules. Use data pipeline tools that improve workflows and deliver information quickly to data users and analytical tools. To ensure collaboration, assemble a response and implementation team with members from different departments of your company, so that solutions are cross-functional and everyone is involved in the final process.

Automate whenever possible

Automation is a critical factor in DataOps success. Automated tools that support quick change management with version control, data quality tests, and continuous integration/continuous delivery (CI/CD) deployment enable an iterative process that increases team output while decreasing overhead. Automation should be implemented throughout the pipeline, from data ingestion through processing to analytics and reporting, to reduce errors and speed up delivery.
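
As an illustration of automated data quality tests in a CI/CD pipeline, the following Python sketch runs a list of checks over a batch and turns the result into a process exit code, which CI systems generally treat as pass (0) or fail (non-zero). The check functions and the `run_quality_gate` name are hypothetical; dedicated data-testing tools offer richer versions of the same idea.

```python
import sys
from typing import Callable

# A check inspects a batch of rows and returns (check_name, passed).
Check = Callable[[list[dict]], tuple[str, bool]]


def no_null_ids(rows: list[dict]) -> tuple[str, bool]:
    """Hypothetical check: every row has a non-null 'id'."""
    return "no_null_ids", all(r.get("id") is not None for r in rows)


def unique_ids(rows: list[dict]) -> tuple[str, bool]:
    """Hypothetical check: no 'id' value appears twice."""
    ids = [r.get("id") for r in rows]
    return "unique_ids", len(ids) == len(set(ids))


def run_quality_gate(rows: list[dict], checks: list[Check]) -> int:
    """Run every check, report failures on stderr, and return an exit code."""
    results = [check(rows) for check in checks]
    failures = [name for name, passed in results if not passed]
    for name in failures:
        print(f"FAILED: {name}", file=sys.stderr)
    # 0 lets the CI job continue; any non-zero value fails the job.
    return 1 if failures else 0
```

A CI job could load a sample batch and end with `sys.exit(run_quality_gate(batch, [no_null_ids, unique_ids]))`, so a failing check blocks the deployment step.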

Log and organize

Logging is crucial in data pipeline design. It allows data engineers to monitor pipeline health, troubleshoot issues, and optimize performance, and it aids auditing and compliance efforts. Properly implemented logging is key to a reliable data pipeline. Recording the information, fixes, and use cases of each implementation provides a clear outline for making repairs or adjustments based on past experience, advancing the team toward its end goal through data harmonization and modeling.
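
A minimal sketch of step-level logging using Python's standard `logging` module. The logger name and the `run_step` wrapper are hypothetical; the point is that every step records what went in, what came out, how long it took, and the full stack trace when it fails, which is the raw material for troubleshooting and audits.

```python
import logging
import time

logging.basicConfig(
    level=logging.INFO,
    format="%(asctime)s %(levelname)s %(name)s %(message)s",
)
log = logging.getLogger("pipeline")  # hypothetical logger name


def run_step(name, func, rows):
    """Run one pipeline step and log row counts, duration, and failures."""
    start = time.perf_counter()
    try:
        result = func(rows)
    except Exception:
        # log.exception also records the stack trace for later troubleshooting.
        log.exception("step=%s status=failed rows_in=%d", name, len(rows))
        raise
    elapsed_ms = (time.perf_counter() - start) * 1000
    log.info(
        "step=%s status=ok rows_in=%d rows_out=%d duration_ms=%.1f",
        name, len(rows), len(result), elapsed_ms,
    )
    return result


# Example: a step that drops empty records.
cleaned = run_step("drop_empty", lambda rs: [r for r in rs if r], [{"a": 1}, {}])
```

Writing the message as `key=value` pairs keeps log lines easy to search and to parse later.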

Continuous improvement

DataOps is a continuous process of improving data pipelines, from design to deployment. Continuous improvement keeps data pipelines up to date, efficient, and aligned with business needs. It also involves setting up continuous monitoring of data quality and performance so that you can take action to improve efficiency.

Standardize your data

Standardizing data formats, quality rules, and processing is crucial for consistency and scalability in data operations; it reduces errors and makes data more reliable. Standardizing the information flow in the data pipeline architecture also increases interoperability and simplifies processes. This approach makes data pipelines easier to develop and maintain, allows greater reuse of code and components, and simplifies integration with other systems. Standardized data pipelines can be shared across teams and departments, promoting collaboration and reducing duplicated effort.
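
One common way to standardize data is to map every source into a single shared schema at the edge of the pipeline. The Python sketch below assumes two hypothetical sources, a CRM export and a web shop, with the field names shown in the comments; the `Order` schema and the adapter functions are illustrative, not a prescribed model.

```python
from dataclasses import dataclass
from datetime import date, datetime
from decimal import Decimal


@dataclass(frozen=True)
class Order:
    """Hypothetical standard schema shared by all downstream consumers."""
    order_id: str
    amount_cents: int
    order_date: date


def from_crm(row: dict) -> Order:
    # Assumed CRM shape: {"OrderID": 7, "Total": "19.99", "Date": "03/29/2023"}
    # Decimal avoids float rounding; assumes at most two decimal places.
    return Order(
        order_id=str(row["OrderID"]),
        amount_cents=int(Decimal(str(row["Total"])) * 100),
        order_date=datetime.strptime(row["Date"], "%m/%d/%Y").date(),
    )


def from_shop(row: dict) -> Order:
    # Assumed web-shop shape: {"id": "A-7", "amount_cents": 1999, "created": "2023-03-29"}
    return Order(
        order_id=str(row["id"]),
        amount_cents=int(row["amount_cents"]),
        order_date=date.fromisoformat(row["created"]),
    )
```

Downstream steps depend only on `Order`, so integrating another source means writing one more adapter rather than changing every consumer.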

Create the culture

To fully realize the benefits of speed and data-driven insights, it’s important to create a culture that values agile concepts and encourages experimentation and innovation. This means not only adopting agile technology but also ensuring that all team members embrace the agile mindset. A culture that prioritizes collaboration and communication ensures that data pipelines are built with the input and expertise of all relevant stakeholders, while a culture of continuous improvement drives the evolution and optimization of data pipelines over time. By fostering an environment that supports data-driven decision-making and innovation, companies create the conditions for developing and maintaining effective data pipelines.

Scale your security

In today’s data-driven world, companies handle large volumes of sensitive data, and integrating security into the data pipeline design helps them ensure data security and compliance. This means embedding security considerations and processes into every stage of the data pipeline, from data ingestion through processing to analytics and reporting. The goal is to protect data against threats such as data breaches, unauthorized access, and malicious attacks. Implement security measures such as encryption, access control, and monitoring to protect the confidentiality, integrity, and availability of data, and regularly test and audit these measures to identify and remediate vulnerabilities.
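
Encryption and access control usually come from the platform, but some protection can be applied inside the pipeline itself. The Python sketch below shows one such measure, pseudonymization: sensitive fields are replaced with a keyed HMAC-SHA256 token at ingestion, so later stages never see the raw values. This is a one-way transformation, not encryption. The field list and the hard-coded key are placeholders; in practice the key would be loaded from a secrets manager.

```python
import hashlib
import hmac

# Placeholder only: in practice, load this key from a secrets manager.
PSEUDONYM_KEY = b"replace-with-secret-from-vault"

SENSITIVE_FIELDS = {"email", "phone"}  # hypothetical list of PII columns


def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace a sensitive value with a keyed, irreversible token (HMAC-SHA256)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def protect(row: dict) -> dict:
    """Tokenize sensitive, non-null fields before the row leaves ingestion."""
    return {
        k: pseudonymize(str(v)) if k in SENSITIVE_FIELDS and v is not None else v
        for k, v in row.items()
    }
```

Because the same input and key always produce the same token, tokenized columns can still be joined and counted downstream.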

Adapt and innovate

Innovation plays a critical role in a data-driven world. With the rapid pace of technological change, organizations must continually adapt and innovate to remain competitive. Innovation can mean using advanced analytics and machine learning to improve data processing and analysis, implementing new technologies to streamline data pipelines, or fostering a culture of experimentation. It also involves exploring new data sources, integrating new tools and platforms, and adopting new best practices to optimize performance and efficiency.

Data pipeline architecture best practices

When designing data pipelines, it is also important to follow certain best practices. Here are five best practices for designing a secure and efficient data pipeline architecture that will serve you well in the long term:

Predictability

It should be easy to follow the path of data and trace any delays or problems back to their origin. Minimizing dependencies is crucial, as they create complex situations that make it harder to trace errors.
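
Declaring dependencies explicitly keeps the data path traceable. The Python sketch below uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+) to derive a run order from a hypothetical task graph, and adds a small helper that lists every upstream task of a given step, which is where you would look first when that step is late or wrong.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
DEPENDENCIES = {
    "ingest_orders": set(),
    "ingest_customers": set(),
    "clean_orders": {"ingest_orders"},
    "join_orders_customers": {"clean_orders", "ingest_customers"},
    "daily_report": {"join_orders_customers"},
}


def execution_order(deps: dict[str, set[str]]) -> list[str]:
    """Return a valid run order; raises graphlib.CycleError on circular dependencies."""
    return list(TopologicalSorter(deps).static_order())


def upstream_of(task: str, deps: dict[str, set[str]]) -> set[str]:
    """Collect every task that can affect `task`, to trace a problem to its origin."""
    seen: set[str] = set()
    stack = list(deps.get(task, ()))
    while stack:
        parent = stack.pop()
        if parent not in seen:
            seen.add(parent)
            stack.extend(deps.get(parent, ()))
    return seen
```

`TopologicalSorter` raises `graphlib.CycleError` if the declared dependencies contain a cycle, which catches one class of hard-to-trace design errors before anything runs.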

Scalability

Data ingestion needs can change dramatically over short periods. Establishing auto-scaling capabilities that tie into monitoring is necessary to keep up with these changing needs.
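
Auto-scaling that ties into monitoring comes down to a rule that turns a monitored metric into a capacity target. The Python sketch below is a hypothetical rule based on queue backlog, clamped between a minimum (to stay responsive) and a maximum (to cap cost); the parameter names and defaults are assumptions.

```python
import math


def desired_workers(
    backlog: int,
    per_worker_capacity: int,
    min_workers: int = 1,
    max_workers: int = 20,
) -> int:
    """Size the worker pool from a monitored backlog, clamped to min/max bounds.

    Hypothetical rule: enough workers to drain the current backlog in one interval,
    where per_worker_capacity is the number of items one worker handles per interval.
    """
    if per_worker_capacity <= 0:
        raise ValueError("per_worker_capacity must be positive")
    needed = math.ceil(backlog / per_worker_capacity)
    return max(min_workers, min(max_workers, needed))
```

Managed autoscalers such as the Kubernetes Horizontal Pod Autoscaler apply a similar ratio-based calculation against a target metric value, so in practice you would usually configure the target rather than write this logic yourself.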

Monitoring

Monitoring is vital for end-to-end visibility of the data pipeline. By automating monitoring with dedicated tools, engineers can identify vulnerable points in their design and respond to issues as soon as they occur. Monitoring also covers verifying data within the pipeline, which ensures consistency and supports proactive security.
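
Two checks that monitoring commonly automates are freshness (has new data arrived recently enough?) and volume (is the batch roughly the expected size?). The Python sketch below returns alert messages instead of sending them; the thresholds and the function name are hypothetical, and wiring the output to a notification channel is left out.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def check_batch(
    last_loaded_at: datetime,
    row_count: int,
    expected_rows: tuple[int, int],
    max_age: timedelta,
    now: Optional[datetime] = None,
) -> list[str]:
    """Return alert messages for a stale or oddly sized batch; [] means healthy.

    Timestamps are assumed to be timezone-aware UTC datetimes.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    alerts = []
    age = now - last_loaded_at
    if age > max_age:
        alerts.append(f"freshness: last load was {age} ago (limit {max_age})")
    low, high = expected_rows
    if not low <= row_count <= high:
        alerts.append(f"volume: {row_count} rows, expected {low} to {high}")
    return alerts
```

An empty list means the batch looks healthy; anything else would be routed to the team's alerting channel.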

Testing

Testing is crucial for evaluating both the architecture itself and the data quality. By repeatedly reviewing, testing, and correcting data, experienced engineers can build a streamlined system with fewer exploitable vulnerabilities.
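
Testing the data logic can start with ordinary unit tests. Here is a minimal sketch using Python's built-in `unittest`; the `deduplicate` transform is a hypothetical example of a pipeline step.

```python
import unittest


def deduplicate(rows: list[dict], key: str) -> list[dict]:
    """Hypothetical transform: keep the first row seen for each key value."""
    seen = set()
    out = []
    for row in rows:
        if row[key] not in seen:
            seen.add(row[key])
            out.append(row)
    return out


class DeduplicateTest(unittest.TestCase):
    def test_removes_repeated_keys(self):
        rows = [{"id": 1, "v": "a"}, {"id": 1, "v": "b"}, {"id": 2, "v": "c"}]
        self.assertEqual(
            deduplicate(rows, "id"),
            [{"id": 1, "v": "a"}, {"id": 2, "v": "c"}],
        )

    def test_empty_input(self):
        self.assertEqual(deduplicate([], "id"), [])
```

Saved in a file such as `test_transforms.py` (a hypothetical name), the tests run with `python -m unittest test_transforms`, which makes them easy to run automatically in a CI pipeline.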

Maintainability

Refactor scripted components of the pipeline when it makes sense, and maintain accurate records, repeatable processes, and strict protocols so that the data pipeline remains maintainable for years to come.

Challenges in data pipeline design

Designing a data pipeline can be complex, because it involves multiple processes and touchpoints. As data travels between locations, the system is exposed to various vulnerabilities and the risk of errors increases. Here are some of the challenges you might face when designing data pipelines:

Increasing data sources

As organizations grow, their data sources tend to multiply, and integrating new data sources into existing pipelines can be challenging. A new data source may differ from the existing ones, and introducing it may have unforeseen effects on the existing pipeline’s data handling capacity.

Scalability

As data sources and volumes increase, it’s common for pipeline nodes to break, resulting in data loss. Building a scalable architecture while keeping costs low is a significant challenge for data engineers.

Increasing complexity

Data pipelines consist of processors and connectors, which help transport and access data between locations. However, as the number of processors and connectors grows, so does the design complexity, and the pipeline becomes harder to implement.

Balancing data robustness and pipeline complexity

A fully robust data pipeline architecture integrates fault detection components and mitigation strategies to combat potential faults. However, adding these to the pipeline design increases complexity, which can be challenging to manage. Pipeline engineers may be tempted to design against every likely vulnerability, but this adds complexity quickly and still doesn’t guarantee protection from all vulnerabilities.
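
One way to keep robustness proportionate is to target the faults you actually observe instead of guarding against everything. For transient failures such as dropped connections, a retry with exponential backoff is a common, contained mitigation. The Python sketch below is a generic helper; which exception types count as transient is an assumption you would adjust per source.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(
    func: Callable[[], T],
    attempts: int = 4,
    base_delay: float = 0.5,
    retry_on: tuple[type[BaseException], ...] = (ConnectionError, TimeoutError),
) -> T:
    """Call func, retrying only the listed transient errors with exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return func()
        except retry_on:
            if attempt == attempts:
                raise  # give up and surface the error to scheduling/alerting
            delay = base_delay * 2 ** (attempt - 1)
            # Random jitter spreads out retries from many workers.
            time.sleep(delay + random.uniform(0, delay / 2))
    raise RuntimeError("unreachable")  # the loop always returns or raises
```

Errors outside `retry_on`, such as a `ValueError` from malformed data, propagate immediately, so retries do not mask genuine data problems.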

Dependency on external factors

Data integration may depend on action from another organization. This dependency can cause deadlocks without proper communication strategies.

Missing data and data quality

Files can get lost along the data pipeline, which can significantly degrade data quality. Adding monitoring to the pipeline architecture can help detect potential risk points and mitigate these risks.
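
A simple safeguard against lost files is to reconcile what a source says it sent with what actually landed. The Python sketch below assumes both sides are available as mappings from file name to row count (for example, a control file from the source and counts taken after ingestion); the input format and the function name are hypothetical.

```python
def reconcile(manifest: dict[str, int], received: dict[str, int]) -> dict[str, list[str]]:
    """Compare expected files and row counts with what was actually ingested.

    manifest / received: file name -> row count (hypothetical inputs).
    """
    expected, arrived = set(manifest), set(received)
    return {
        # Listed by the source but never ingested.
        "missing": sorted(expected - arrived),
        # Ingested but not announced by the source.
        "unexpected": sorted(arrived - expected),
        # Present on both sides, but with a different row count.
        "count_mismatch": sorted(
            name for name in expected & arrived if received[name] != manifest[name]
        ),
    }
```

Any non-empty list is a signal to alert on before the affected data reaches consumers.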

Overcoming the challenges of data pipeline design

Designing an efficient data pipeline involves overcoming several challenges to ensure that data is processed securely, accurately, and in real time. Here are some ways to overcome the challenges of data pipeline design:

Repurpose data warehouses and data lakes

Organizations should adopt a more agile approach to repurposing data warehouses and data lakes, making them flexible enough to handle operational use cases in real time.

Ensure privacy and security

Data security and privacy are crucial for any data pipeline. Security teams should be involved in the development process to ensure that DataSecOps practices are built into the foundation of the cloud transformation, reducing the risk of data exposure.

Find the right balance

Building out a DataOps pipeline requires the right balance of people, processes, and technology. Organizations should choose the approach that fits their business needs and invest in technology that streamlines their processes.

Address integration challenges

Legacy systems, databases, data lakes, and data warehouses, whether on-premises or in the cloud, can create integration challenges. Organizations should invest in tools and processes that enable smooth data flow across different systems.

Follow the Lego model to build

Breaking pipelines into smaller, composable pieces makes them easier to manage and reduces complexity. Provide self-service tools that empower stakeholders to pursue their goals.

Invest in testing processes

Organizations should invest in new tools and testing processes for lower-risk change management, which helps reduce the complexity of pipeline management.

In conclusion

Data pipeline management is crucial for handling critical data transfers and requires dedicated resources to avoid data downtime. This includes observability, automated alert notifications, problem resolution, and change management. Reliable data pipeline operations improve data quality and increase trust in the insights built on that data.

Overcoming the challenges of data pipeline design requires a holistic approach that encompasses people, processes, and technology. Adopting an agile approach, investing in the right tools and testing processes, and ensuring privacy and security are some of the key steps that organizations can take to build an efficient and robust data pipeline.

Applying DataOps design principles simplifies the management of multiple data pipelines that serve many users from a growing number of sources, and it improves on traditional approaches in agility, utility, governance, and quality. The core idea is to build flexible data processes and technology that accommodate change. Building robust data pipelines is critical to deriving value from data, and you may need an expert DataOps practitioner to help you do it.

Get in touch with our data experts to implement a data pipeline architecture for your business.