30 Jun Unlocking the Power of Databricks – A guide to Migrating from Snowflake

Data is the new oil. In today’s digital world, it is the fuel that drives businesses forward, helping them make better decisions, improve operations, and connect with customers. That’s why a data management service is essential for business success: a well-managed data infrastructure allows businesses to collect, store, analyze, and use data effectively, which in turn supports better decisions, greater efficiency, and better customer service.

Snowflake and Databricks are two powerful data management platforms that have gained significant popularity for their capabilities in handling large-scale data processing. So, how is Databricks different from Snowflake, and why do organizations migrate from Snowflake to Databricks? How do you perform a Snowflake to Databricks migration? In this blog, we answer these questions and more.

Overview of Snowflake and Databricks

Snowflake is a cloud-based data warehousing platform known for its scalability, separation of storage and compute, and robust query performance. It provides a unified and secure environment for organizations to store, process, and analyze their data. On the other hand, Databricks is an integrated analytics and AI platform built on Apache Spark, offering a unified workspace for data engineering, data science, and collaborative analytics. Databricks empowers organizations with advanced analytics capabilities, real-time processing, and scalable compute resources.

While Snowflake and Databricks each offer unique strengths, organizations may consider migrating from Snowflake to Databricks to leverage several advantages. Databricks provides a consolidated platform that unifies data engineering and analytics, fostering improved collaboration and streamlined processes. The advanced data science capabilities of Databricks, coupled with the power of Spark’s machine learning libraries, enable organizations to build and deploy sophisticated machine learning models. Additionally, Databricks offers serverless compute options and Delta Lake, an open-source storage layer that adds ACID transactions to data lake storage; together, these can help optimize data storage and compute resources, resulting in potential cost savings. Broadly, Snowflake is better suited for analytical workloads, while Databricks is better suited for data engineering, data science, and machine learning workloads.

Migrating from Snowflake to Databricks can offer several benefits for customers, including:

- A unified analytics platform: Databricks provides an integrated platform for data engineering, data science, and analytics. This can help Snowflake customers to improve collaboration and streamline processes by consolidating their data processing and analytics workflows into a single platform.

- Scalability and performance: Databricks leverages Apache Spark, a powerful distributed processing engine, to handle large-scale data processing. This means that customers can process and analyze large data volumes with high performance.

- Advanced data science capabilities: Databricks provides built-in support for machine learning and advanced analytics. This can help customers to leverage the power of Spark’s machine learning libraries to build and deploy machine learning models.

- Cost optimization: Databricks offers serverless compute options, and Delta Lake, its open-source storage layer, helps optimize how data is stored and queried. Together, these can potentially lead to cost savings.

- Real-time streaming analytics: Spark Structured Streaming on Databricks enables real-time processing and analysis of streaming data. This can help customers to incorporate real-time analytics into their data pipelines, enabling faster insights and decision-making based on live data streams.

- Data pipeline orchestration: Databricks provides capabilities for orchestrating complex data pipelines. This can help customers to automate their data processing tasks and build end-to-end data pipelines efficiently.

- Ecosystem integration: Databricks integrates with a wide range of data sources, databases, and data tools. This can help customers to seamlessly connect and work with their existing data ecosystem.

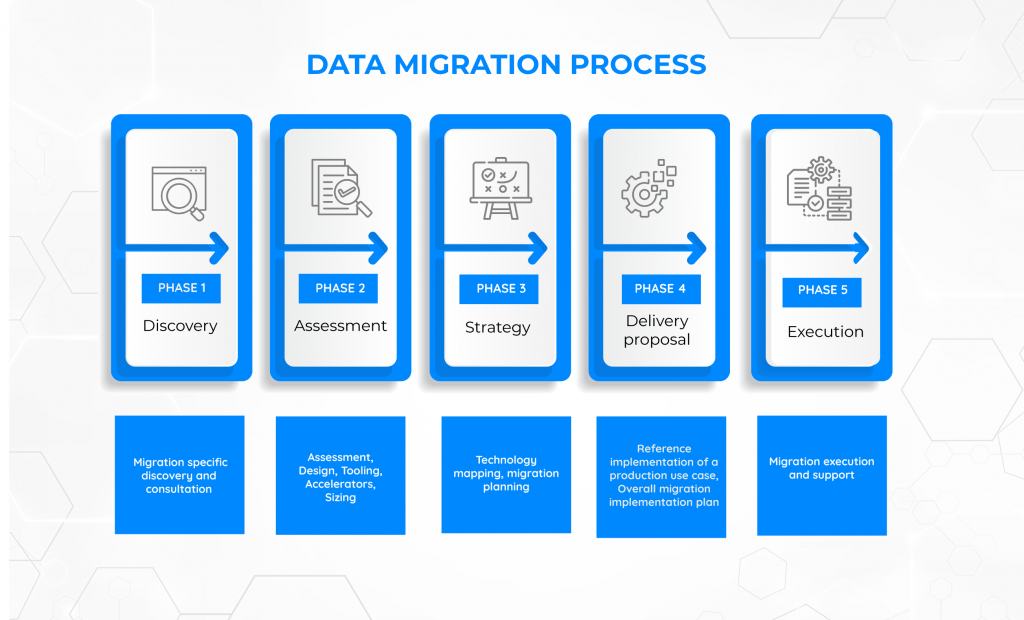

Pre-migration Considerations

Before embarking on the migration journey, careful planning and preparation are vital. Organizations should assess the compatibility of their data, identify schema mapping requirements, and define security considerations. A thorough evaluation of the feasibility and potential challenges associated with migration is crucial. This means identifying the scope of the project, including the data volumes, types, and sources involved, then defining a migration timeline, allocating the necessary resources, and developing a mitigation strategy for the risks and challenges identified. By considering these factors upfront, organizations can ensure a smoother transition and minimize disruptions during the migration process.

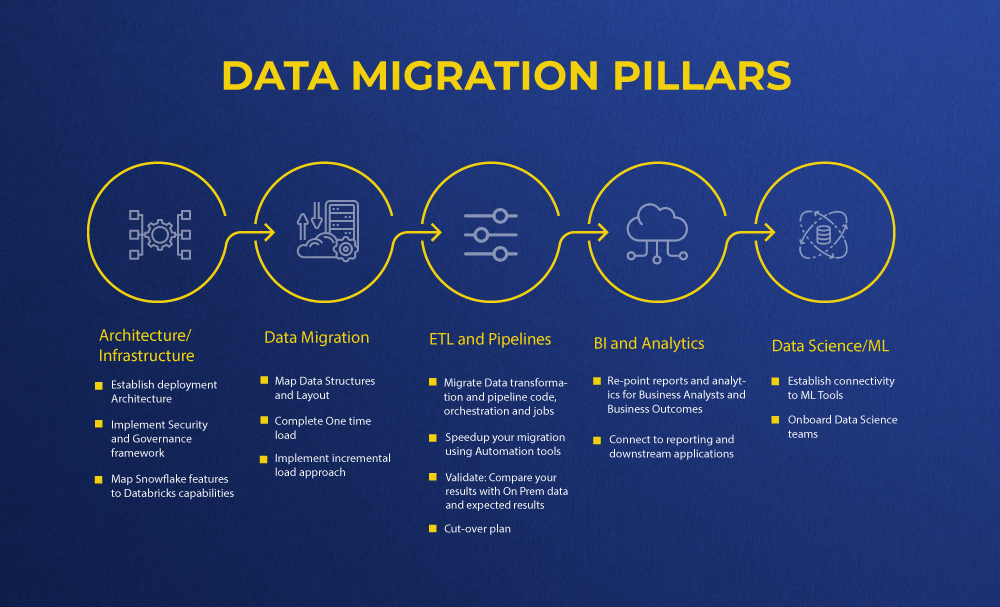

Once you have done the assessment and planning, analyze the data stored in Snowflake, including data structures, schemas, and dependencies. Then, evaluate the compatibility of the data with Databricks, considering differences in data types, formats, and conventions. This helps to identify and perform any necessary transformations or adjustments to ensure compatibility.
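As a rough illustration of the dependency analysis described above, the sketch below orders objects so that each one is migrated only after the objects it reads from. The object names and the `DEPENDENCIES` inventory are hypothetical; in a real project this inventory would come from your own analysis of the Snowflake account. It uses `graphlib` from the Python standard library (Python 3.9 or later).

```python
from graphlib import TopologicalSorter

# Hypothetical inventory: each object maps to the set of objects it reads from.
DEPENDENCIES = {
    "raw.orders": set(),
    "raw.customers": set(),
    "analytics.v_orders_enriched": {"raw.orders", "raw.customers"},
    "analytics.v_daily_revenue": {"analytics.v_orders_enriched"},
}


def migration_order(dependencies):
    """Return object names so that every object comes after its dependencies.

    Raises graphlib.CycleError if the inventory contains a circular dependency.
    """
    return list(TopologicalSorter(dependencies).static_order())


if __name__ == "__main__":
    for name in migration_order(DEPENDENCIES):
        print(name)
```

Base tables come out before the views built on them, which gives one possible order for migrating and validating objects in batches.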

To create a migration strategy, conduct a technical mapping to find out how various functionalities in Snowflake translate to Databricks. Map the Snowflake schema to the corresponding schema in Databricks, determine the mapping for the tables, views, and stored procedures to be migrated, and ensure that the data mapping aligns with the target schema in Databricks.
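One small part of this technical mapping is translating column types. The sketch below shows what such a lookup might look like. The mapping table is an illustrative assumption, not an official or complete correspondence, and the function name `map_type` is made up for this example; check each entry against the current Snowflake and Databricks documentation before relying on it.

```python
import re

# Illustrative assumptions only: a partial Snowflake-to-Databricks type lookup.
_SIMPLE_TYPES = {
    "VARCHAR": "STRING",
    "CHAR": "STRING",
    "STRING": "STRING",
    "TEXT": "STRING",
    "FLOAT": "DOUBLE",
    "DOUBLE": "DOUBLE",
    "REAL": "DOUBLE",
    "BOOLEAN": "BOOLEAN",
    "DATE": "DATE",
    "TIMESTAMP_LTZ": "TIMESTAMP",
    "BINARY": "BINARY",
}

# Matches a type name with optional "(precision)" or "(precision, scale)".
_TYPE_PATTERN = re.compile(
    r"^\s*([A-Za-z_]+)\s*(?:\(\s*(\d+)\s*(?:,\s*(\d+)\s*)?\))?\s*$"
)


def map_type(snowflake_type):
    """Map a Snowflake column type string to a Databricks SQL type string.

    Raises ValueError for anything not covered, so that unmapped types are
    flagged for manual review instead of being silently guessed.
    """
    match = _TYPE_PATTERN.match(snowflake_type)
    if match is None:
        raise ValueError(f"Cannot parse type: {snowflake_type!r}")
    base = match.group(1).upper()
    precision, scale = match.group(2), match.group(3)
    if base in ("NUMBER", "DECIMAL", "NUMERIC"):
        # Snowflake defaults to precision 38 and scale 0 when omitted.
        return f"DECIMAL({precision or 38},{scale or 0})"
    if base in _SIMPLE_TYPES:
        return _SIMPLE_TYPES[base]
    raise ValueError(f"No mapping defined for type: {snowflake_type!r}")


if __name__ == "__main__":
    for column_type in ("NUMBER(10, 2)", "VARCHAR(16)", "TIMESTAMP_LTZ"):
        print(column_type, "->", map_type(column_type))
```

Semi-structured types such as `VARIANT` are deliberately left out of the table, so they raise an error and get a deliberate decision from the migration team.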

- Data Extraction from Snowflake: Extract the data from Snowflake using appropriate methods such as a bulk unload to cloud storage with COPY INTO, the Snowflake connector for Spark, or SQL queries. Ensure that the extracted data is complete and reflects a consistent state of the source throughout the extraction process.

- Data Transformation and Cleansing: Perform any necessary data transformations and cleansing to align the data with the schema and requirements of Databricks. This may include data type conversions, filtering, merging, or aggregating the data as needed.

- Data Loading into Databricks: Load the transformed data into Databricks using suitable mechanisms such as Databricks File System (DBFS), Delta Lake, or Spark connectors. Optimize the loading process for performance and scalability, considering factors such as partitioning, parallelism, and data compression.

- Testing and Validation: Validate the migrated data in Databricks to ensure its accuracy and consistency. Perform data quality checks, compare the migrated data with the source data in Snowflake, and validate against expected results. Conduct thorough testing to identify any discrepancies or data integrity issues.

- Code Migration and Integration: Migrate any custom code, scripts, or queries from Snowflake to Databricks. Adapt the code to utilize the Databricks ecosystem, including leveraging Apache Spark for data processing and analytics. Ensure that the migrated code functions correctly and performs optimally in the Databricks environment.

- Performance Optimization: Optimize the performance of the migrated data and code in Databricks. Utilize Databricks’ scalability and distributed processing capabilities to enhance data processing speed and efficiency. Fine-tune queries, leverage caching, and optimize resource utilization for improved performance.

- Monitoring and Maintenance: Establish monitoring mechanisms to track the performance, usage, and stability of the migrated data in Databricks. Implement appropriate logging, alerting, and diagnostics to identify and address any issues promptly. Regularly maintain and update the migrated data and associated processes as needed.

- User Training and Adoption: Provide training and support to users who will be working with the migrated data in Databricks. Familiarize them with the new environment, tools, and functionalities. Encourage user adoption and collaboration to maximize the benefits of migration.
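The testing and validation step above often starts with a simple comparison of row counts and checksums between source and target. The following is a minimal sketch of that idea in plain Python, assuming the rows from each side have already been fetched as tuples with identically normalized values. The function name `table_fingerprint` and the sample rows are hypothetical, and at real data volumes you would more likely compute such aggregates inside Snowflake and Databricks rather than in client code.

```python
import hashlib


def table_fingerprint(rows):
    """Return (row_count, checksum) for an iterable of row tuples.

    The checksum sums per-row SHA-256 digests modulo 2**256, so it does not
    depend on row order and duplicate rows still change the result.
    Assumption: both sides render values identically under repr(), for
    example the same numeric and timestamp types.
    """
    count = 0
    combined = 0
    for row in rows:
        digest = hashlib.sha256(repr(tuple(row)).encode("utf-8")).digest()
        combined = (combined + int.from_bytes(digest, "big")) % (1 << 256)
        count += 1
    return count, f"{combined:064x}"


if __name__ == "__main__":
    # Hypothetical sample rows standing in for query results from each system.
    snowflake_rows = [(1, "alice"), (2, "bob"), (2, "bob")]
    databricks_rows = [(2, "bob"), (1, "alice"), (2, "bob")]
    if table_fingerprint(snowflake_rows) == table_fingerprint(databricks_rows):
        print("Fingerprints match")
    else:
        print("Mismatch: investigate row counts and values")
```

A matching fingerprint is a quick first signal, not proof of correctness; it is best combined with the data quality checks and expected-result comparisons described in the steps above.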

Snowflake to Databricks migration best practices

When migrating from Snowflake to Databricks, it is important to follow best practices to ensure a successful transition. Here are some recommended practices for a Snowflake to Databricks migration:

Adopt a phased and agile approach: Migrate in phases rather than in a single cutover. Instead of simply lifting and shifting from Snowflake to Databricks, focus on modernizing to the lakehouse architecture.

Balance lift and shift with modernization: While lift and shift can be a quick way to move code and applications, it is essential to simultaneously modernize and apply best practices. Use automated code converters to facilitate the lift and shift process, and immediately apply optimal Databricks patterns and best practices to modernize the code.

Learn, iterate, and improve: Treat migration as an iterative process. Learn from each iteration, assess what worked and what didn’t, and continuously improve. Add additional use cases and workloads gradually as you gain experience and confidence in the new environment.

Show success in shorter sprints: Break the migration process into smaller, manageable sprints to demonstrate success to stakeholders more quickly. By showing incremental progress and achieving tangible outcomes in each sprint, you build confidence and gather feedback that can inform improvements for the subsequent iterations.

In Conclusion

By migrating to Databricks, organizations can modernize their data architecture and unlock new possibilities for data processing and analytics, driving success in the data-driven era. However, data leaders often have concerns about cost, learning curve, and ecosystem breadth when migrating to Databricks. To navigate this transition smoothly and optimize the data ecosystem, it helps to work with a trusted Databricks partner like Nuvento. With Nuvento’s expertise, organizations can minimize costs, accelerate the learning process for data teams, and leverage Databricks’ advanced analytics capabilities. To unlock the power of your business data with Databricks, talk to a data expert at Nuvento now.